This work was performed under the auspices of the United States Atomic Energy Commission.

A historical account of the evolution of the Lawrence Radiation Laboratory Livermore Octopus network is given. The evolution of the Octopus from a centralized network to a distributed one that consists of a superimposition of specialized sub-networks is described. Each of the sub-networks performs specific network functions; they are interconnected but independent enough of one another that a failure in one part of the network does not cause a catastrophic interruption of overall network service.

INTRODUCTION

A major problem for networks where hosts are geographically separated and separately controlled is the installation-to-installation relationship. It is difficult to establish and maintain common control languages among hosts. There are varying problems with common carriers. Differing installation capabilities can cause one installation to become overly dependent on another without concomitant control, or an installation might lose excessive capability to another installation, causing administrative or jurisdictional problems.

The hosts in the Octopus network are not geographically separate and they are under single administrative control. The control languages on the hosts are the same and there is no involvement with common carriers. Although accounting and security are of great concern to the Lawrence Radiation Laboratory (LRL), the approach to them is simplified because of the absence of a commercial environment and the strict physical security of all hardware and communication links. It is clear that the Octopus network would not be as advanced or useful if we had to first resolve many of the problems mentioned here.

The centralized control and well-defined environment of the Octopus network allow for highly stylized communication hardware and protocols, which minimize hand-shaking problems and balance the data rates among the hosts and the shared data base.

THE CLASSICAL OCTOPUS CONCEPT

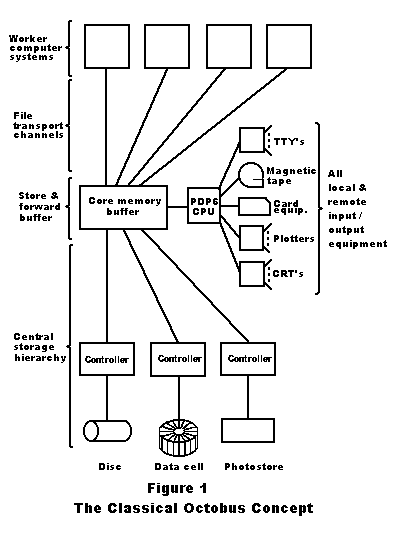

The current Octopus network evolved from the original Octopus concept pictured in Figure 1. The original network consisted of a central system (PDP-6 and core memory buffer) with tentacles extending to worker (host) computer systems and various storage and I/O equipment. It was envisaged that this central system would manage the shared data base (Data Cell and Photostore), be a message concentrator for interactive terminals (TTY’s), and manage pools of local and remote I/O equipment. It was even considered possible for the central system to perform some load leveling. Most of the intended host computers at that time had differing batch operating systems, however, so load leveling would have involved some difficult conversion problems.

Two major problems with this system became apparent about two years ago. The first was that the usefulness of the hosts, the data base, the interactive terminals, etc., was totally dependent on the reliability of the central (PDP-6) system. If the central CPU or memory failed (as often happened), there was no network at all. If a channel between a host and the PDP-6 failed, the host was isolated simultaneously from the data base, user terminals, and remote I/O.

Fortunately, only the TTY and data base parts of this network were implemented. The persistence and variety of hardware and software problems in this partial system showed clearly a fundamental weakness of a centralized network and the need for a distributed architecture.

The second major problem in the centralized network was the difficulty of maintaining reasonable stability and reliability in software and hardware while modifying, expanding, and improving the system. Generally, reliability is achieved when things are left alone. Under pressure to expand the capability of a centralized network, software and hardware people must change what already works. In the centralized system all parts of the network were highly interdependent, and changes to accommodate a new capability caused perturbations in the entire network.

THE CURRENT OCTOPUS NETWORK

It was decided about two years ago to decentralize the LRL network. Each major function of the network, i.e., remote terminal (TTY) system, shared data base, remote job entry terminals (RJET), was to be a separate sub-network as independent from the others as possible.

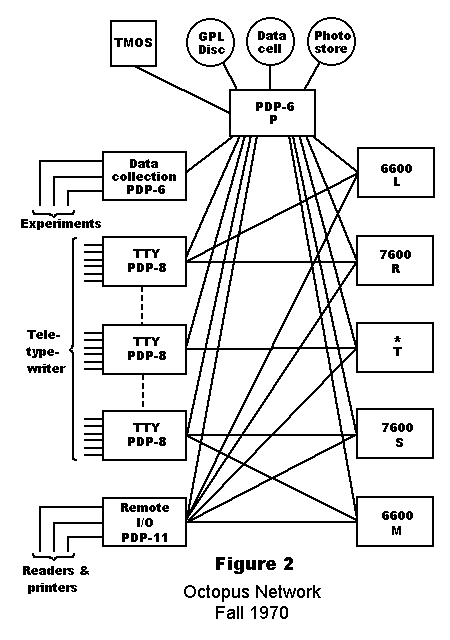

Figure 2 shows the current state of the network, except that the remote I/O (RJET) sub-network is not yet fully implemented and the *T machine has not yet arrived. This design has been successful in achieving independence of network services, greater stability in the TTY’s, and a marked decrease in overall network failures due to change and expansion.

Each of the sub-networks is controlled by its own computer (called a concentrator). In the case of the TTY sub-network, there are three independent concentrators providing almost continuous service to most of the available TTY’s.

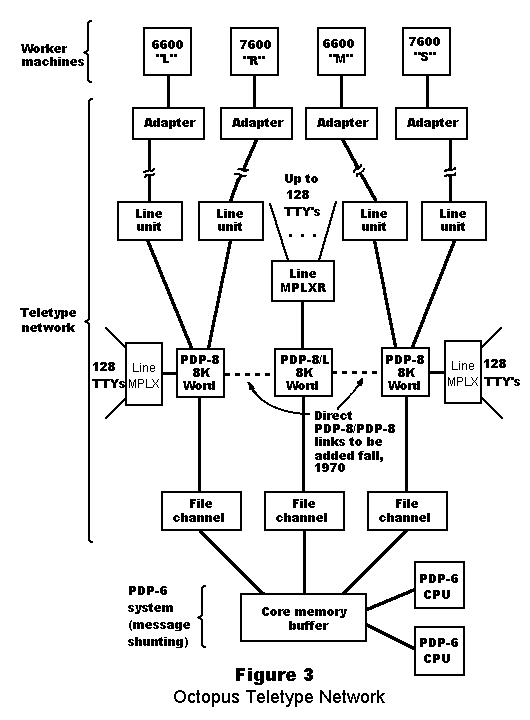

Each of the PDP-8’s can have up to 128 TTY’s connected to it. There are now about 350 TTY’s in the network. Any TTY on any PDP-8 can be connected to any host machine or to the PDP-6. Because of a lack of ports into the hosts, however, each PDP-8 does not connect directly to every host. Currently a message from a TTY on the upper PDP-8 to the 6600-M host is shunted to the PDP-6 over the upper PDP-8 to PDP-6 connection and then from the PDP-6 to the 6600-M through the other PDP-8. The two PDP-8 to PDP-8 channels shown in Figure 2 exist, and software to handle them is being written. The PDP-8 to PDP-8 connections should make the TTY sub-network independent of the PDP-6 system.

The PDP-8’s are 8K systems with 12-bit words; 4K is used for message assembly and disassembly buffers. The TTY’s connect to a multiplexor that converts from serial to parallel and vice versa for up to 128 full duplex teletypewriters. The PDP-8 controls the multiplexor, packs and unpacks characters into or out of message buffers, and routes the messages. These PDP-8 systems are efficient, relatively low-cost, highly specialized hardware that performs bit manipulation functions that would be costly and cumbersome in a host. The TTY sub-network is shown in more detail in Figure 3. The PDP-6 system is still used for message shunting until the PDP-8 to PDP-8 links become operational, at which time the PDP-6 will be used only for backup routing.
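The message shunting described above can be illustrated with a small routing sketch. The link table here is an assumption made for illustration: the node names `PDP8-upper` and `PDP8-lower` and the wiring are hypothetical and are not taken from Figure 2. The table reproduces only the one case the text describes, a TTY on the upper PDP-8 reaching the 6600-M, and the breadth-first search stands in for whatever routing logic the PDP-8 software actually uses.

```python
from collections import deque

# Illustrative link table (an assumption, not the actual Figure 2 wiring).
LINKS = {
    "PDP8-upper": {"PDP-6", "6600-L"},
    "PDP8-lower": {"PDP-6", "6600-M"},
    "PDP-6": {"PDP8-upper", "PDP8-lower"},
    "6600-L": {"PDP8-upper"},
    "6600-M": {"PDP8-lower"},
}


def route(links, source, dest):
    """Return a shortest hop-by-hop path from source to dest, or None."""
    queue = deque([[source]])
    seen = {source}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == dest:
            return path
        # Sorted only to make the search order deterministic.
        for nxt in sorted(links.get(node, ())):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None


def with_link(links, a, b):
    """Return a copy of the link table with a two-way link a <-> b added."""
    new = {node: set(peers) for node, peers in links.items()}
    new.setdefault(a, set()).add(b)
    new.setdefault(b, set()).add(a)
    return new


# Without a PDP-8 to PDP-8 channel the message is shunted through the PDP-6.
shunted = route(LINKS, "PDP8-upper", "6600-M")

# With the channel in service the PDP-6 drops out of the path.
direct = route(with_link(LINKS, "PDP8-upper", "PDP8-lower"),
               "PDP8-upper", "6600-M")
```

In this toy topology `shunted` passes through the PDP-6 and `direct` does not, which is the independence from the PDP-6 that the PDP-8 to PDP-8 channels are meant to provide.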

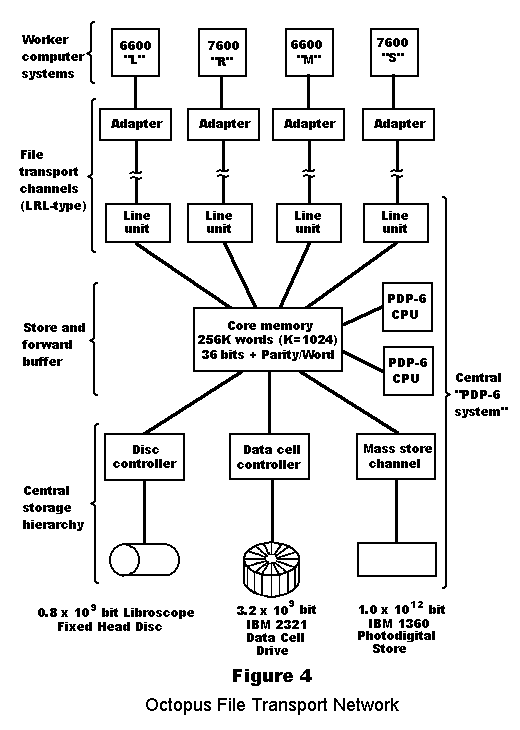

The prime function of the PDP-6 sub-system is the management and maintenance of the filing system for the network shared data base. The shared data base hardware comprises some core memory buffers, a PDP-6 CPU, a fixed head disc, an IBM Data Cell, and an IBM Photodigital Store. The fixed head disc is used to hold active user directories and as a buffer to assemble files coming into or going out of the data base. This buffering smooths the disparity between the high data rate of the host to PDP-6 path and the low data rate of the PDP-6 to storage device path.

Figure 4 shows the file transport sub-network (shared data base). The data rates between the hosts and the PDP-6 are about 10 million bps. The LRL Data Channel widths are 36 bits for the 6600 and 12 bits for the 7600. Although the burst data rate of this system is quite high, the overall average rate is about 3 million bps because of hand shaking, setup, bookkeeping, and the approximately 1.25 million bps average rate of the Photostore.

Data may be moved from data base to host, host to data base, or host to host. The basic units of data in the system are files of varying length. The movement of a file between host and data base may take more than one transaction. The maximum size of a single transaction is about 63K 36-bit words. Complete files are assembled on the fixed head disc at the halfway point of any transfer and remain there for as long as it takes to complete the entire transfer. Hence, it is possible for a user to transfer a file to the Photostore and retrieve it from the disc before it has been written on the Photostore.
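The figures quoted for the file transport sub-network (a maximum transaction of about 63K 36-bit words, a burst rate of about 10 million bps, and an overall average of about 3 million bps) lend themselves to a rough back-of-the-envelope calculation. The sketch below assumes that K means 1024 and that a file is simply cut into maximum-size transactions; both are assumptions for illustration, and the function names are hypothetical.

```python
import math

WORD_BITS = 36                      # PDP-6 word size quoted in the text
MAX_TRANSACTION_WORDS = 63 * 1024   # "about 63K" words, assuming K = 1024
BURST_BPS = 10_000_000              # approximate host to PDP-6 burst rate
AVERAGE_BPS = 3_000_000             # approximate overall average rate


def transactions_needed(file_words):
    """Number of maximum-size transactions needed to move a file."""
    return math.ceil(file_words / MAX_TRANSACTION_WORDS)


def transfer_seconds(file_words, bps):
    """Idealized time to move a file of 36-bit words at a given bit rate."""
    return file_words * WORD_BITS / bps
```

Under these assumptions a 200,000-word file needs four transactions and takes roughly 0.7 seconds at the burst rate, or roughly 2.4 seconds at the average rate.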

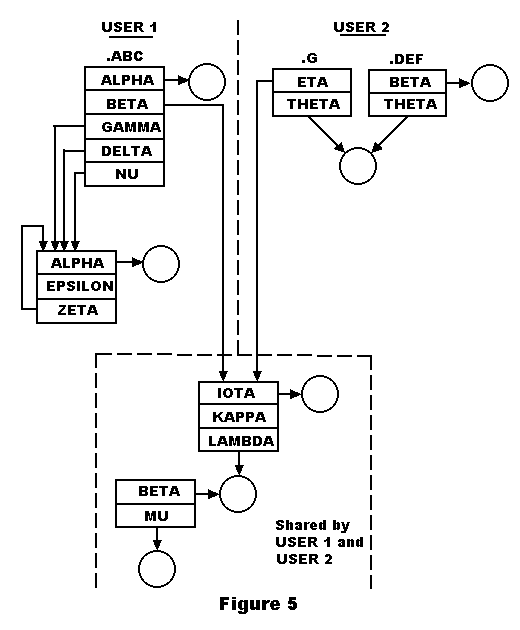

Management of data within the shared data base is accomplished by the ELEPHANT system within the PDP-6. An elaborate and versatile scheme of linked and shared directories is available to the user to organize and catalogue his files. Figure 5 shows some of the capabilities of ELEPHANT and the kinds of sharing that are possible among users.

Named boxes are directory or file names. A name that points to a circle (representing an actual file of data) is a file name. A decimal point followed by alphanumerics is a root directory name, i.e., the beginning of a directory chain. Some interesting possibilities can be noticed in Figure 5. USER 1 has two files named ALPHA. One may be referenced as .ABC ALPHA and the other as .ABC GAMMA ALPHA. The same file THETA can be accessed from either of USER 2’s directories. USER 1 and USER 2 share files IOTA, LAMBDA, and MU by establishing pointers to a common directory. Finally, a single file may be known by more than one name, as is the case with LAMBDA or BETA in the shared area.
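The directory chains described above can be modeled in a few lines. This is only a sketch of the naming idea, not of ELEPHANT itself: directories are plain dictionaries, the entry name `COMMON` and the root `.XYZ` for USER 2 are hypothetical (the text does not give them), and the assumption that LAMBDA and BETA are two names for one file is read from the last sentence of the paragraph.

```python
class File:
    """Stand-in for an actual file of data (a circle in Figure 5)."""

    def __init__(self, label):
        self.label = label


def resolve(roots, path):
    """Follow a space-separated chain such as '.ABC GAMMA ALPHA'."""
    names = path.split()
    node = roots[names[0]]          # root directory name, e.g. '.ABC'
    for name in names[1:]:
        if not isinstance(node, dict):
            raise KeyError(f"{name}: previous name is a file, not a directory")
        node = node[name]
    return node


# Two distinct files that both carry the name ALPHA.
alpha_top = File("ALPHA in .ABC")
alpha_gamma = File("ALPHA in GAMMA")

# One file known by two names in the shared area.
lam = File("LAMBDA")
common = {"IOTA": File("IOTA"), "LAMBDA": lam, "BETA": lam, "MU": File("MU")}

roots = {
    # USER 1: root .ABC, as in the text; 'COMMON' is a hypothetical name.
    ".ABC": {"ALPHA": alpha_top, "GAMMA": {"ALPHA": alpha_gamma},
             "COMMON": common},
    # USER 2: hypothetical root name pointing at the same common directory.
    ".XYZ": {"COMMON": common},
}
```

Because both roots hold a pointer to the same `common` dictionary, a file reached through `.ABC COMMON LAMBDA` is the very same object as one reached through `.XYZ COMMON BETA`, while `.ABC ALPHA` and `.ABC GAMMA ALPHA` remain separate files.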

Data transfers among hosts and data base are usually initiated by users logged into host systems. The same control language for file transport exists on all hosts. The basic set of verbs available includes READ or WRITE STORAGE (RDS, WRS), READ or WRITE COMPUTER (RDC, WRC), CREATE DIRECTORY (CRD), ABORT (ABT), etc. Commands from host to PDP-6 and responses from PDP-6 to host are transmitted through the PDP-8 TTY network in small message packets. Actual data transfers are started and controlled by the PDP-6 system in accordance with its priorities.
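The verbs listed above can be collected into a small lookup table. The sketch below is illustrative only: the text gives the mnemonics and their meanings but not the command syntax, so the idea that a command is a verb followed by space-separated operands, and the function name `parse_command`, are assumptions. The table is also incomplete, since the text ends its list with "etc."

```python
# Mnemonics and meanings as listed in the text (the list there ends in "etc.").
VERBS = {
    "RDS": "READ STORAGE",
    "WRS": "WRITE STORAGE",
    "RDC": "READ COMPUTER",
    "WRC": "WRITE COMPUTER",
    "CRD": "CREATE DIRECTORY",
    "ABT": "ABORT",
}


def parse_command(line):
    """Split a command into verb and operands (operand syntax is assumed)."""
    parts = line.split()
    if not parts:
        raise ValueError("empty command")
    verb = parts[0].upper()
    if verb not in VERBS:
        raise ValueError(f"unknown verb: {verb}")
    return {"verb": verb, "meaning": VERBS[verb], "operands": parts[1:]}
```

A call such as `parse_command("WRS .ABC ALPHA")` would yield the verb `WRS` with the operands `.ABC` and `ALPHA`; how the real system delimits file names and options is not stated in the text.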

The ELEPHANT system determines where to record a file in the data base according to whether the user specifies it to be a long-term or a medium-term file. Long-term files are recorded in the Photostore (a write-once device) and medium-term files are recorded in the Data Cell (a read-write device). There is currently a 10-day purge time for unused files in the Data Cell; all user directories also reside in the Data Cell but are not subject to purge.
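The placement and purge rules just described reduce to two small decisions, sketched below. The function names and the string labels `"long"` and `"medium"` are hypothetical, and the sketch assumes that a file becomes purgeable once a full 10 days have passed since its last use; the text does not say exactly how the 10-day period is measured.

```python
from datetime import datetime, timedelta

PURGE_AFTER = timedelta(days=10)    # purge time quoted in the text


def choose_device(term):
    """Pick the storage device from the user's long/medium-term choice."""
    if term == "long":
        return "Photostore"         # write-once device
    if term == "medium":
        return "Data Cell"          # read-write device
    raise ValueError(f"unknown term: {term}")


def is_purgeable(device, is_directory, last_used, now):
    """Only unused, non-directory entries in the Data Cell are purged."""
    if device != "Data Cell" or is_directory:
        return False
    # Boundary handling (>= 10 days) is an assumption.
    return now - last_used >= PURGE_AFTER
```

For example, a medium-term file last used on 1 November would be eligible for purge on 11 November under this reading, while a directory in the Data Cell or any file in the Photostore never would be.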

The PDP-6 system also drives a television monitor display system (TMDS) and a data collection system. The TMDS consists of a 16-track fixed head disc representing 16 channels, fanning out through a programmable switch to 64 coax lines, each connected to a monitor. TMDS is an experimental system that has provided valuable experience and groundwork which will be helpful in the design and implementation of future LRL Keyboard Display Systems. Although it is not highly interactive, the TMDS has been very useful for users with a need to rapidly scan alphanumeric and graphical data. Scanning of soft copy long before any hard copy can be produced has greatly improved the turn-around time for some users.

EXPANSION OF THE OCTOPUS

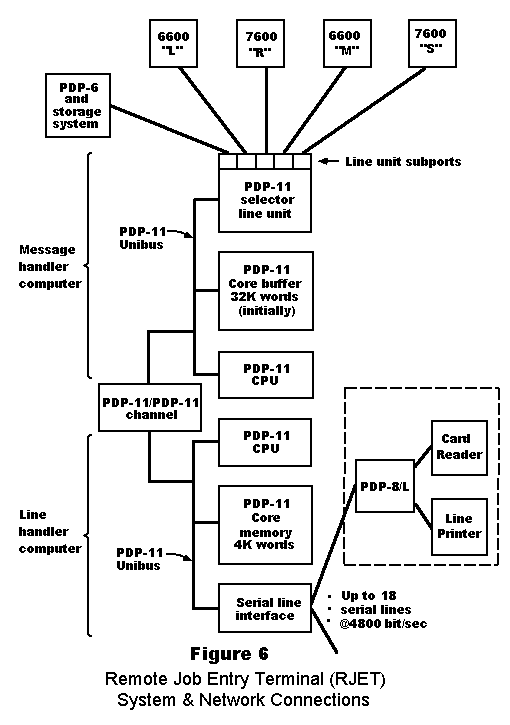

By Fall 1971, a remote job entry terminal (RJET) sub-network should be operational. This system will have two PDP-11’s functioning as a concentrator to control the movement of card and print files to and from the host computers and remote printers and card readers. One PDP-11 will be connected to all hosts and the PDP-6 through a selector line unit (Figure 6). It will have about 32K of 16-bit memory, in which it will assemble bursts of data into appropriate message blocks for routing to a host or to the other PDP-11. The second PDP-11 will assemble and disassemble data moving over serial lines (twisted pair) to and from up to 18 remote PDP-8’s, each of which will drive a card reader and printer.

The basic remote station will have a 600-line-per-minute printer and a 300-card-per-minute reader. However, it is envisaged that non-standard remote stations may be implemented to drive other equipment or to collect data from experiments.

LRL is currently studying possibilities for a sub-network of Keyboard Interactive Displays (KIDS) as an augmentation of, and partial replacement for, the TTY capability. Some of the attributes being considered for a KIDS terminal are an 8-bit, 256-character (192 graphics) keyboard and a display with a capacity of at least a thousand alphanumeric characters and a graphical capability of a few hundred vector inches. APL graphics, scrolling, and cursor capability are considered necessary for this system.

The design of the KIDS sub-network will be similar to the others. It will have a concentrator computer connected to all hosts. However, in addition to standard concentrator functions of data assembly and buffering, it will provide some text editing services which should reduce traffic between itself and the hosts. It is expected that this system will reduce the volume of hard copy needed, improve user efficiency and turn-around time, and satisfy most of the interactive graphics needs of LRL short of that which requires very high performance graphics equipment.

CONCLUSION

The original centralized LRL Octopus network was abandoned in favor of an almost fully connected distributed network. The network consists of a superimposition of sub-networks, each independent of the others and each performing a specific network function.

Most of the shortcomings of the original Octopus have been overcome in the new architecture. It is seldom that there are no hosts or no interactive terminals operable. The file transport system runs better and longer than it ever has. Some of this improvement is attributable to the simplification and stability introduced by removal from the PDP-6 system of network functions not related to file transport.

The latest network exhibits reasonable stability and reliability in an environment of constant change and expansion. Network services to users have been more than adequate while I/O and terminal capability is being expanded. It is expected that this network will survive well the constant evolution and changes necessitated by the elimination and addition of hosts, I/O services and terminal systems.

QUESTION PERIOD

Q: Why do you have direct connections to the 6600-L and the 7600-R when you never use them?

A: The direct connection from a PDP-8 to host is used when the user is at a TTY connected to that PDP-8. The routing of a message from a PDP-8 to the PDP-6 to another PDP-8 occurs only when the first PDP-8 is not directly connected to the host the user is trying to talk to.

Q: If a PDP-8 goes down, what happens to the lines that are attached to that PDP-8? Are they automatically out?

A: Yes. But the PDP-8’s rarely fail and when one does, a TTY connected to a different PDP-8 is usually available for most users.

Q: Was there any thought given to making TTY’s dialable to different PDP-8’s as opposed to a hard connection to a given PDP-8?

A: No. The PDP-8’s are very reliable and the PDP-8 to PDP-8 channels should eliminate the currently small problem of routing through the PDP-6.

Q: Does each of the 6600’s and 7600’s have its own disc, etc.?

A: Yes. Each of the hosts has disc, tape, card reader and, in the case of the 7600’s, drum systems local to itself.

Q: Apart from the fact that two are 6600’s and two are 7600’s, how heterogeneous are these computers? Does the user care which one he gets onto?

A: Some jobs can be run only on a 7600 and some only on a 6600 because of differences in hardware facilities, e.g., large core memory, size of central core, etc. A user’s computer time allocation will determine where he runs. The work load may also influence where the user runs. Generally, there is no network load leveling. The user finally determines where he runs, subject to the kinds of constraints mentioned above.

Q: As I understand you, your original centralized Octopus had problems of reliability and stability but it was functionally adequate when it was working. If that’s so, why couldn’t you have duplexed the PDP-6?

A: There are two PDP-6 CPU’s. Unfortunately, there were problems throughout (interfaces, line units, memories, etc.). It could not all be duplexed.

This paper appeared in COMPUTER NETWORKS, edited by Randall Rustin, ©1972 Prentice-Hall Inc., Englewood Cliffs, N.J., pp. 95-100. The oral presentation was given Nov 30 – Dec 1, 1970 at the Courant Institute of NYU.

Samuel F. Mendicino

Lawrence Radiation Laboratory